October 3, 2025

In Parts 1 through 3, we built a sophisticated industrial AI agent that can access real-time equipment data from an OPC UA server, query product recipes from a TimescaleDB database, and search through maintenance documents using agentic RAG. But there's a fundamental scalability problem lurking beneath this impressive functionality.

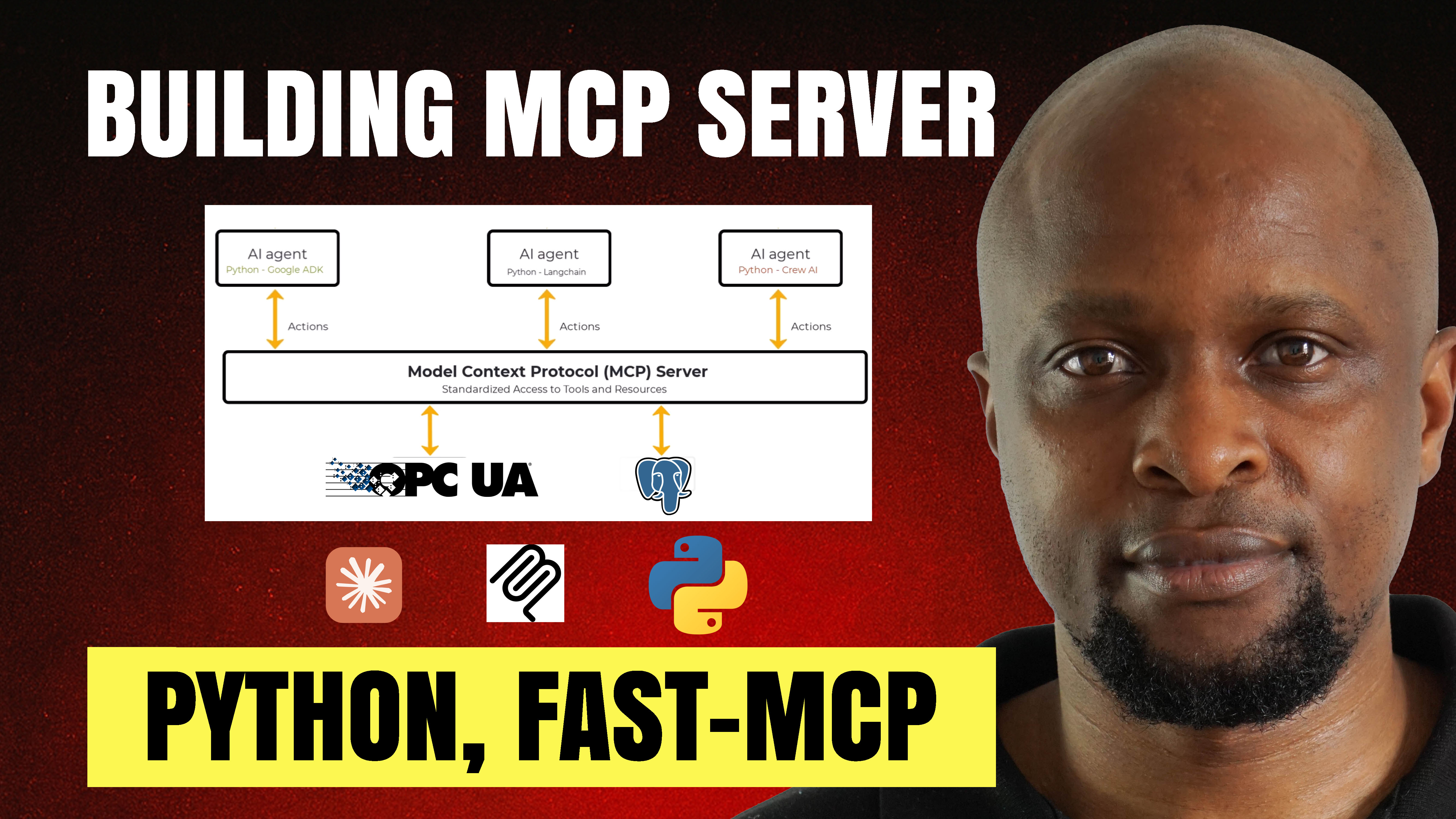

We created these capabilities by manually mapping Python functions into LangChain tools. That works fine for one agent built with one framework. But what happens when you need to build a second AI agent using a different framework? Perhaps your organization wants to experiment with the Google Agent Development Kit, or another team is building an agent with CrewAI, and these agents also need access to the same OPC UA server, database, and maintenance documents.

With the current approach, you'd have to rebuild and remap all the tools for each framework. You'd write the same OPC UA integration again, reimplement the database queries again, recreate the RAG pipeline again—each time in slightly different ways to match each framework's specific requirements. A third agent means doing it all a third time. The result is multiple AI agents accessing the same data sources, but each through custom, one-off integrations that you have to maintain separately.

This clearly doesn't scale, and that's exactly what we're solving in this tutorial.

What We're Building

This is Part 4 of our five-part series on building agentic AI for industrial systems. In this tutorial, we're building a Model Context Protocol (MCP) server that standardizes how AI agents access industrial data and tools. With MCP, we build the integration once and it works with any AI agent from any framework, eliminating the constant reinvention of integration logic.

The concept is elegant in its simplicity. Instead of building custom connections every time a new agent needs access to your industrial data, you create a standardized server that exposes tools and data in a consistent way. Any MCP-compatible AI agent can then connect to this server and immediately access all the capabilities it provides, regardless of what framework the agent was built with.

For this tutorial, we're wrapping our OPC UA server with an MCP server, creating a standardized interface that allows any agent to interact with our batch plant equipment data. The same approach applies to databases, RAG pipelines, or any other industrial data source or tool you want to make available to AI agents.

Understanding the Model Context Protocol

MCP is an open protocol that standardizes how LLM-powered AI applications connect to tools and data sources. Think of it as defining a common language that all AI agents can speak when they need to access external capabilities. Rather than each framework inventing its own way of connecting to data sources, MCP provides a universal standard.

The protocol follows a client-server architecture with three main participants. The MCP host is an AI application or agent that manages one or more clients. The MCP client maintains a dedicated one-to-one connection with an MCP server and retrieves context on behalf of the host. The MCP server provides tools, resources, and prompts to MCP clients. In practice, a single host can connect to multiple servers by creating one client per server.
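To make the three roles concrete, here is a minimal stdlib-only sketch of how they relate. The class names and the server names (`opcua-server`, `recipe-db`) are illustrative assumptions, not types from any MCP SDK; the point is only that the host owns one client per server.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Stands in for an MCP server that exposes a set of named tools."""
    name: str
    tools: list[str]


@dataclass
class Client:
    """Holds exactly one server connection (the one-to-one rule)."""
    server: Server

    def list_tools(self) -> list[str]:
        return list(self.server.tools)


@dataclass
class Host:
    """The AI application: owns one client per server it connects to."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        # A new client is created for every server, never shared.
        self.clients[server.name] = Client(server)

    def all_tools(self) -> dict[str, list[str]]:
        return {name: c.list_tools() for name, c in self.clients.items()}


host = Host()
host.connect(Server("opcua-server", ["read_tank_levels", "read_machine_states"]))
host.connect(Server("recipe-db", ["query_recipe"]))
print(host.all_tools())
```

Adding a third data source here means one more `connect` call on the host; nothing about the existing clients changes.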

The foundation of MCP is its primitives, which are essentially standardized building blocks for communication. Server-side primitives define what the server exposes to clients. Tools are executable functions that an MCP client can call, such as file operations, database queries, or API calls—in our case, reading OPC UA tags or querying equipment states. Resources are contextual data sources that may be of interest to clients, like OPC UA tag lists, database records, or API responses. Prompts are reusable prompt templates that clients can utilize to get the best results from what the server exposes, such as system prompts or few-shot examples.
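A client discovers a tool through its descriptor: a name, a description, and a JSON Schema for its inputs (the `inputSchema` field). The sketch below derives such a descriptor from an ordinary Python function using only the standard library. The `describe_tool` helper and the `read_tank_level` function are hypothetical, and this is an illustration of the idea rather than what any SDK does internally.

```python
import inspect
import json

# Map a few Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn) -> dict:
    """Build a tool descriptor (name, description, inputSchema) from a function."""
    properties = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


def read_tank_level(tank_id: str, unit: str = "liters") -> float:
    """Return the current level of one tank (hypothetical example tool)."""
    return 0.0


descriptor = describe_tool(read_tank_level)
print(json.dumps(descriptor, indent=2))
```

Because the descriptor is plain JSON, an agent built with any framework can read it and decide when and how to call the tool.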

Client-side primitives define what clients expose to servers. Sampling allows servers to request model completions from whatever LLM the client is using, keeping the MCP server model-agnostic. For example, an MCP server that provides SQL access can ask the client to generate queries from natural language while staying completely independent of any specific language model. Elicitation allows servers to request more input or confirmation from end users when needed. Logging enables servers to push log messages to clients for monitoring and debugging purposes.

MCP supports two transport mechanisms for communication. The standard input/output (stdio) transport enables local process-to-process communication, which suits agents running on the same machine as the server. The Streamable HTTP transport uses HTTP POST for requests and optionally Server-Sent Events for streaming responses, enabling remote access when needed.
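Whichever transport is used, the messages themselves are JSON-RPC 2.0 objects, and over stdio each message travels as a single line of JSON. The following sketch builds a `tools/call` request and frames it for stdio. The helper names and the tool name `read_tank_levels` are assumptions made for illustration.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request that asks a server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def frame_for_stdio(message: dict) -> bytes:
    """Serialize a message as one newline-terminated line of JSON."""
    # json.dumps escapes newlines inside strings, so the line stays intact.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def parse_line(line: bytes) -> dict:
    """Decode one line received from the other side of the pipe."""
    return json.loads(line.decode("utf-8"))


request = make_tool_call(1, "read_tank_levels", {})
wire = frame_for_stdio(request)
print(wire)
```

The same request body would be sent in an HTTP POST when using the HTTP transport; only the framing around it differs.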

What the Tutorial Covers

The full video tutorial walks through building a complete MCP server for industrial data from scratch. We start with an existing Python script that acts as an OPC UA client connected to a simulated batch plant OPC UA server. This script has two key functions—one that reads tank levels to tell us how much material is available for production, and another that reads the operational states of all machines. You'll see this script in action, pulling real-time values that match what's displayed in the Ignition SCADA visualization.
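As a rough idea of what the tank-level function might look like, here is a sketch that assumes the `asyncua` package. The node IDs, tank names, and endpoint URL are hypothetical placeholders, not the ones used in the video. The reading logic takes the reader as a parameter so it can be exercised with a stand-in when no OPC UA server is available.

```python
import asyncio
from typing import Awaitable, Callable

# Hypothetical node IDs; replace with the ones from your own address space.
TANK_NODES = {
    "tank_a": "ns=2;s=BatchPlant.TankA.Level",
    "tank_b": "ns=2;s=BatchPlant.TankB.Level",
}

ReadValue = Callable[[str], Awaitable[float]]


async def read_levels(read_value: ReadValue, nodes: dict[str, str]) -> dict[str, float]:
    """Read every node through the supplied reader, keyed by tank name."""
    return {name: await read_value(node_id) for name, node_id in nodes.items()}


async def read_levels_from_opcua(url: str) -> dict[str, float]:
    """Connect with asyncua and read the tank levels from a live server."""
    from asyncua import Client  # imported here so the sketch loads without asyncua

    async with Client(url=url) as client:
        async def read_value(node_id: str) -> float:
            return await client.get_node(node_id).read_value()

        return await read_levels(read_value, TANK_NODES)


async def fake_read_value(node_id: str) -> float:
    """Stand-in reader so the sketch runs without a server."""
    return 42.0 if "TankA" in node_id else 17.5


levels = asyncio.run(read_levels(fake_read_value, TANK_NODES))
print(levels)
```

Against a real server you would call something like `asyncio.run(read_levels_from_opcua("opc.tcp://localhost:4840"))`, where the URL is again a placeholder for your own endpoint.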

Then we transform these OPC UA data access functions into MCP tools using the FastMCP Python package, which provides a high-level interface for building MCP servers quickly and efficiently. The transformation process is remarkably simple—we initialize an MCP server, decorate our existing Python functions with the MCP tool decorator, and specify the transport mechanism. That's essentially it. The functions we've already written become standardized tools that any MCP-compatible agent can access.
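The three steps can be sketched as follows, assuming the `FastMCP` class that ships with the official `mcp` Python SDK (`mcp.server.fastmcp`). The server name `batch-plant` and the simulated return values are placeholders; the tutorial's functions read live values from the OPC UA server instead. The decorator is applied here as a plain function call so the data functions stay importable even where the `mcp` package is not installed.

```python
def read_tank_levels() -> dict[str, float]:
    """Return the current level of each tank (simulated values)."""
    return {"tank_a": 42.0, "tank_b": 17.5}


def read_machine_states() -> dict[str, str]:
    """Return the operational state of each machine (simulated values)."""
    return {"mixer": "running", "reactor": "idle"}


def build_server():
    """Create the MCP server and register both functions as tools."""
    from mcp.server.fastmcp import FastMCP  # requires the `mcp` package

    server = FastMCP("batch-plant")
    # server.tool() returns the decorator; calling it on a function is
    # equivalent to writing @server.tool() above the function definition.
    server.tool()(read_tank_levels)
    server.tool()(read_machine_states)
    return server


def main() -> None:
    """Start the server on stdio, for agents running on the same machine."""
    build_server().run(transport="stdio")
```

Calling `main()` from the script's entry point starts the server. The docstrings matter: they become the tool descriptions an agent reads when deciding which tool to call.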

The tutorial demonstrates how to set up the development environment using uv, a modern Python package manager that simplifies dependency management and virtual environment creation. We install the necessary packages, including asyncua for OPC UA communication and the MCP package for server functionality. Then we modify our existing Python script by adding just a few lines of code: importing the MCP server, initializing it, decorating our functions, and specifying the transport.

Finally, we test the MCP server using MCP Inspector, a browser-based environment that allows you to explore MCP tools, resources, and prompts interactively. You'll see how to start the server, connect to it through the inspector, list the available tools, and actually execute them to retrieve live data from the OPC UA server. The inspector provides a visual way to verify that your MCP server is working correctly before connecting actual AI agents to it.

What You'll Learn

By following this tutorial, you'll understand what the Model Context Protocol is and why it matters for building scalable industrial AI systems. You'll see firsthand how MCP solves the integration multiplier problem: without a standardized protocol, every agent framework needs its own integration with every data source, so the effort grows with the number of agents multiplied by the number of data sources.

You'll learn the architecture of MCP servers and the role of primitives in standardizing communication between agents and data sources. The tutorial demonstrates the practical process of converting existing Python functions into MCP tools, showing you that creating standardized integrations doesn't require rewriting your existing code. It's about wrapping what you already have in a standardized interface.

You'll gain hands-on experience with FastMCP, which dramatically simplifies the process of building production-ready MCP servers. The tutorial shows you how minimal the code changes are—often just adding a few decorators and initialization lines to existing functions. This low barrier to entry means you can start standardizing your industrial data access immediately without major refactoring.

Perhaps most importantly, you'll understand how to think about building reusable, framework-agnostic integrations. This mindset shift is crucial as AI agents become more prevalent in industrial operations. Rather than building point-to-point integrations between each agent and each data source, you build standardized servers that any agent can use. With this architecture, integration effort grows with the sum of agents and data sources rather than with their product as your AI ecosystem grows.
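The difference is easy to check with simple arithmetic. The sketch below counts integrations for the three data sources from this series (OPC UA server, TimescaleDB database, maintenance documents) under a simplifying assumption: each point-to-point integration, each MCP server, and each framework's MCP client counts as one unit of work.

```python
def point_to_point(agents: int, sources: int) -> int:
    """Every agent framework gets its own integration with every source."""
    return agents * sources


def via_mcp(agents: int, sources: int) -> int:
    """One MCP server per source plus one MCP client per agent framework."""
    return agents + sources


SOURCES = 3  # OPC UA server, TimescaleDB database, maintenance documents

for agents in (1, 3, 5):
    print(
        f"{agents} agent(s): "
        f"{point_to_point(agents, SOURCES)} point-to-point vs "
        f"{via_mcp(agents, SOURCES)} with MCP"
    )
```

With a single agent the standardized approach costs slightly more (4 units versus 3), which is why the payoff only shows up once a second or third framework enters the picture.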

Watch the Full Tutorial

The complete video tutorial provides a detailed, step-by-step walkthrough of building an MCP server for industrial data. You'll see the actual code, the server initialization, the tool decoration process, and live testing using MCP Inspector showing real data flowing from an OPC UA server through the MCP interface.

The Multi-Agent Challenge

With our MCP server, we've solved a key challenge—giving AI agents standardized access to industrial data through reusable tools. Any agent that speaks MCP can now connect to our OPC UA server without custom integration work. But there's still one more challenge ahead, and it's one that becomes increasingly important as industrial AI systems mature.

What happens when you have multiple AI agents in your system that need to not only access tools but also communicate and exchange information with each other in a standardized way? Imagine a predictive maintenance agent that detects potential equipment issues and needs to communicate with a production scheduling agent to coordinate maintenance windows. Or a quality control agent that identifies defects and needs to share findings with a root cause analysis agent.

Without a standardized protocol for agent-to-agent communication, you're back to building custom integrations between each pair of agents that need to interact. The number of possible pairs grows quadratically: n agents can require up to n(n-1)/2 pairwise integrations, so 5 agents can mean 10 integrations and 10 agents can mean 45. You need a way for agents to discover each other, understand what capabilities each agent provides, and exchange information reliably.

That's where the Agent-to-Agent (A2A) communication protocol comes in. Part 5 of this series will show you how to build a multi-agent industrial AI system and implement the A2A protocol to enable seamless communication and collaboration among your AI agents, creating a truly integrated intelligent system.