October 3, 2025

The AI agent we built in Parts 1 and 2 works well—it connects to real-time equipment data through an OPC UA server, queries product recipes from a TimescaleDB database, and makes intelligent go/no-go decisions about production runs. But there's a critical gap in this system, and it's the kind of gap that can lead to costly mistakes in real industrial environments.

What about all the information that isn't in the real-time sensors or structured databases? What about the maintenance schedules sitting in Excel files, the calibration certificates in PDFs, the equipment inspection reports, and the spare parts inventory lists? This is where some of the most critical production constraints live, and our current agent is completely blind to them.

That's exactly what we're solving in this tutorial.

What We're Building

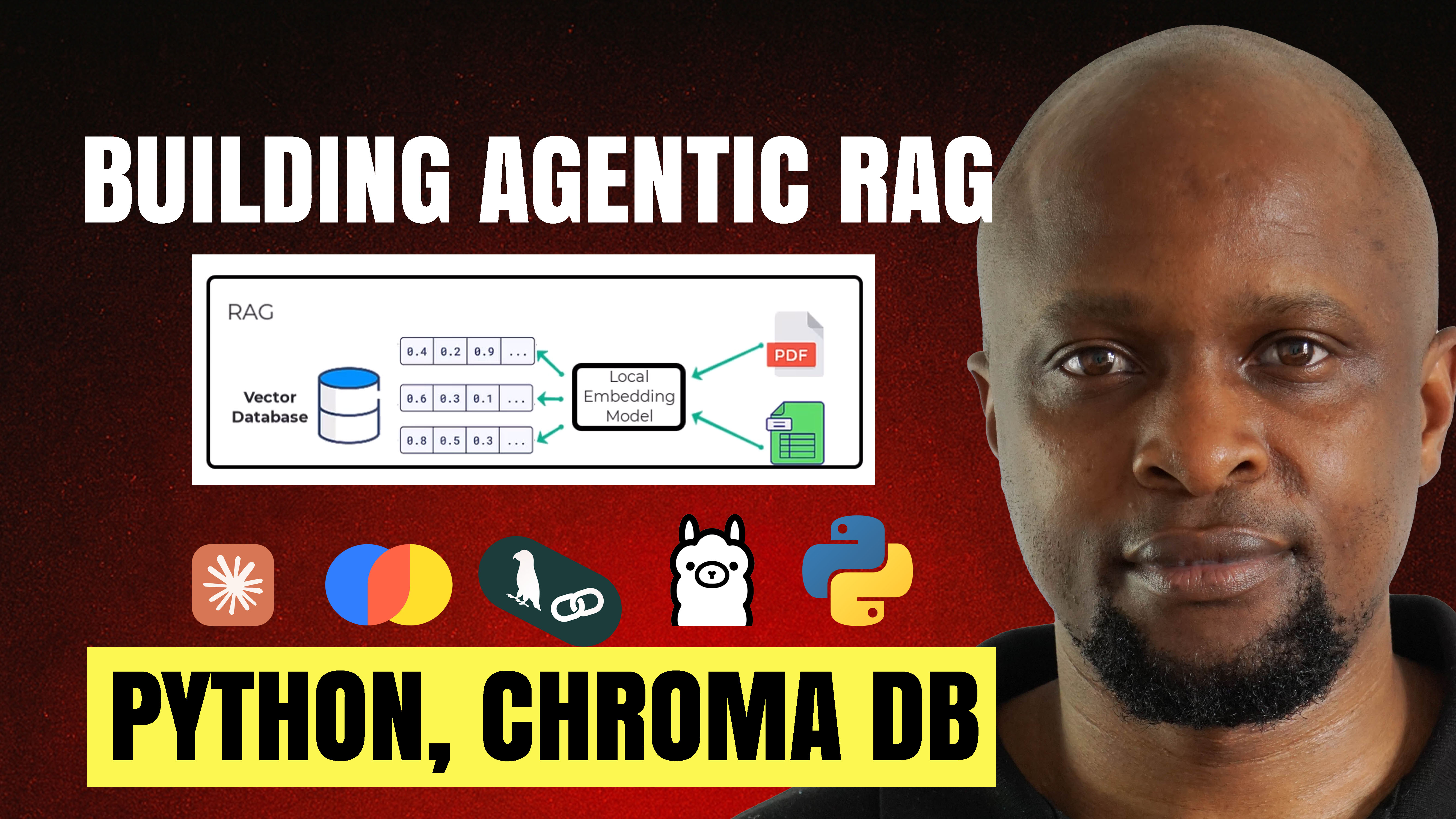

This is Part 3 of our five-part series on building agentic AI for industrial systems. In this tutorial, we build an agentic RAG (Retrieval-Augmented Generation) pipeline that lets our AI agent search and reason over unstructured maintenance documents alongside the real-time operational data it already uses. The result is an agent that can make informed decisions by considering the complete operational picture, not just the structured data.

Imagine asking your agent: "Can we produce five batches of Product A tomorrow?" Right now, it checks material levels and equipment states and says yes if everything looks good. But what if there's scheduled maintenance on the reactor tomorrow morning? What if a critical calibration certificate expires tonight? What if recent vibration readings suggest the mixer is degrading? Without access to these documents, the agent might confidently approve production that should never have been started.

With agentic RAG, the agent gains the ability to search through maintenance schedules, inspection reports, calibration records, and equipment logs to surface this critical context before making decisions. It's the difference between an agent that knows what's happening right now versus one that understands the full operational context.

Why Agentic RAG Matters

There are two main approaches to implementing RAG systems, and understanding the difference is crucial. Naive RAG automatically searches for relevant documents and force-feeds that context to the language model with every single query. It's like handing someone a stack of potentially relevant documents every time you ask them a question, whether they need them or not. This approach wastes computational resources, adds latency, and often clutters the model's context with irrelevant information.

Agentic RAG takes a fundamentally different approach. Instead of forcing context onto the agent, we provide the RAG system as a tool that the agent can choose to use. The agent decides if and when it needs to search the maintenance documents based on the actual query. If someone asks about current tank levels, the agent doesn't waste time searching maintenance records—it just queries the OPC UA server. But if someone asks about production feasibility tomorrow, the agent recognizes it should check maintenance schedules and equipment reliability reports before making that call.
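The contrast between the two approaches can be sketched in a few lines of Python. This is a toy illustration, not the tutorial's code: the tool names are hypothetical, the tools return placeholder strings, and a simple keyword check stands in for the decision that the language model makes in a real agent.

```python
from typing import Callable, Dict, List

# Hypothetical tools. Each returns a placeholder string instead of real data.
def read_live_equipment_data(query: str) -> str:
    return "placeholder: live values from the OPC UA server"

def search_maintenance_documents(query: str) -> str:
    return "placeholder: excerpts from maintenance documents"

TOOLS: Dict[str, Callable[[str], str]] = {
    "read_live_equipment_data": read_live_equipment_data,
    "search_maintenance_documents": search_maintenance_documents,
}

def plan_naive(query: str) -> List[str]:
    """Naive RAG: document retrieval runs for every query, needed or not."""
    return ["search_maintenance_documents", "read_live_equipment_data"]

def plan_agentic(query: str) -> List[str]:
    """Agentic RAG: retrieval is a tool that is called only when useful.

    ASSUMPTION: a keyword check stands in for the language model's decision.
    """
    plan = ["read_live_equipment_data"]
    planning_hints = ("tomorrow", "next week", "schedule", "feasib")
    if any(hint in query.lower() for hint in planning_hints):
        plan.append("search_maintenance_documents")
    return plan

def run(plan: List[str], query: str) -> Dict[str, str]:
    """Call each planned tool and collect its output by tool name."""
    return {name: TOOLS[name](query) for name in plan}
```

With this sketch, a question about current tank levels produces a plan with only the live-data tool, while the "five batches of Product A tomorrow" question also triggers the document search.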

This isn't just an efficiency improvement. It's about building agents that reason about what information they need, rather than blindly processing whatever we shove at them. In industrial environments where decisions have real consequences and systems need to operate reliably at scale, this distinction becomes critical.

The Technical Architecture

The complete agentic RAG pipeline we're building involves several interconnected components working together. We start with maintenance documents—in the tutorial, these are markdown files containing maintenance schedules, equipment health scores, inspection results, vibration measurements, and spare parts inventory. In production environments, these would likely be Excel spreadsheets, PDFs, Word documents, or data exported from your CMMS (Computerized Maintenance Management System).

These documents need to be converted into a format that enables semantic search, which means converting text into vectors—essentially lists of numbers that mathematically represent the meaning of the content. We use an embedding model for this conversion, and importantly, we're running this model locally using Ollama rather than relying on cloud APIs. This maintains the data privacy and edge deployment benefits we established in Part 2.

The vectors are stored in Chroma, an open-source vector database built for fast similarity search. When the agent needs information, it converts its query into a vector using the same embedding model, then searches the database for the most similar document chunks. This semantic search goes beyond simple keyword matching: it ranks chunks by closeness in meaning, so a relevant passage can be found even when it shares no exact words with the query.
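To make "most similar" concrete, here is a minimal sketch of a similarity search using only the Python standard library. It assumes cosine similarity as the metric, which is one common choice and not necessarily what the tutorial's Chroma collection is configured to use. The three-dimensional vectors and the chunk texts are made-up toy values; a real embedding model produces vectors with hundreds of dimensions.

```python
import math
from typing import List, Sequence, Tuple

def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(
    query_vector: Sequence[float],
    stored: List[Tuple[str, Sequence[float]]],
    k: int = 2,
) -> List[Tuple[float, str]]:
    """Return the k stored chunks most similar to the query, best first."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in stored]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

# Toy data: invented chunk texts with hand-written vectors (ASSUMPTION).
STORED_CHUNKS = [
    ("Reactor maintenance schedule entry", [0.9, 0.1, 0.0]),
    ("Spare parts inventory list", [0.0, 0.2, 0.9]),
    ("Mixer vibration measurement", [0.2, 0.9, 0.1]),
]

# Stand-in for the embedded form of a question about reactor maintenance.
QUERY_VECTOR = [0.8, 0.2, 0.0]
results = top_k(QUERY_VECTOR, STORED_CHUNKS, k=2)
```

A vector database does the same ranking, but with indexing structures that avoid comparing the query against every stored vector.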

But here's where our implementation goes beyond basic RAG. Rather than just providing a generic document search tool, we've created specialized tools that extract specific decision-critical information. The agent has tools to check maintenance schedules for conflicts, verify calibration status of critical equipment, and assess equipment reliability based on recent inspection data. These tools bridge the gap between raw document search and actionable intelligence, giving the agent exactly the information it needs in a format optimized for decision-making.

What the Tutorial Covers

The full video tutorial walks through the complete process of building this agentic RAG pipeline from scratch. We start by installing and configuring Ollama to run a local embedding model, showing you how to download the model and verify it's working correctly on your machine. This is important because it keeps all your sensitive maintenance data local rather than sending it to external APIs.

Then we dive into building the RAG pipeline itself. You'll see how to load maintenance documents from a folder, split them into appropriately sized chunks for semantic search, convert those chunks into vectors using the local embedding model, and store everything in the Chroma vector database. The tutorial explains why chunking matters: a long document covers many different topics, so a single embedding of it blurs them together and makes semantic search less precise. By splitting documents into smaller chunks whose edges overlap, we keep context that would otherwise be cut off at chunk boundaries while maintaining search precision.
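The chunking step can be sketched as a small standalone function. This is a simplified character-based version written for illustration; the splitter, chunk size, and overlap used in the tutorial are not specified here, so the function name and default values below are assumptions.

```python
from typing import List

def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into chunks of up to chunk_size characters.

    Consecutive chunks share `overlap` characters, so a sentence that
    straddles a boundary appears in both neighbouring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    step = chunk_size - overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
    return chunks
```

For example, `chunk_text("abcdefghij", chunk_size=4, overlap=1)` yields three chunks in which each one begins with the last character of the previous one. Production splitters usually also try to break on paragraph or sentence boundaries rather than at arbitrary character positions.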

Next, we build the semantic search functionality and wrap it in specialized tools. Rather than giving the agent a generic "search documents" tool, we create tools like "check maintenance schedule," "check calibration status," and "check equipment reliability." Each tool constructs targeted queries, performs the semantic search, and returns formatted results that directly answer specific production-relevant questions. This design makes the agent far more effective at extracting actionable intelligence from documents.
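A specialized tool of this kind might look roughly like the sketch below. The function signature, the query wording, and the output format are assumptions made for illustration, and the search function is passed in as a parameter so the example runs without a vector database. In the tutorial, the equivalent function would call the Chroma-backed semantic search and be registered as a LangChain tool.

```python
from typing import Callable, List

# A search function takes a query and a result count, and returns chunk texts.
SearchFn = Callable[[str, int], List[str]]

def check_maintenance_schedule(equipment: str, date: str, search: SearchFn) -> str:
    """Build a targeted query, run the search, and format the hits.

    ASSUMPTION: the query template and the response wording are illustrative.
    """
    query = f"scheduled maintenance for {equipment} on {date}"
    hits = search(query, 3)
    if not hits:
        # Say explicitly that nothing was found, so the agent does not
        # read an empty result as "no maintenance is planned".
        return (
            f"No maintenance records found for {equipment}. "
            "Treat the schedule as unknown, not as clear."
        )
    lines = "\n".join(f"- {hit}" for hit in hits)
    return f"Maintenance records relevant to {equipment} on {date}:\n{lines}"

# Stand-in search functions for demonstration only.
def fake_search(query: str, k: int) -> List[str]:
    return ["placeholder maintenance record"][:k]

def empty_search(query: str, k: int) -> List[str]:
    return []
```

Returning a formatted answer, including an explicit message for the empty case, gives the agent something it can reason over directly instead of a list of raw snippets.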

The tutorial then shows how to integrate these RAG tools into our existing LangChain agent alongside the tools for accessing OPC UA and database data. We update the agent's prompt to explain when and how to use these new capabilities, adding production rules like "Product A cannot be produced within 24 hours after reactor maintenance." Finally, we test the complete system and watch as the agent orchestrates calls across real-time sensors, databases, and maintenance documents to make fully informed production decisions.
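In the tutorial this rule lives in the agent's prompt. To show exactly what the rule means, the sketch below expresses the same 24-hour condition as a plain Python check. The function name and the choice to measure from the end of maintenance are assumptions; the timestamps in any real use would come from the maintenance documents.

```python
from datetime import datetime, timedelta

def blocked_by_reactor_maintenance(
    production_start: datetime,
    maintenance_end: datetime,
    lockout: timedelta = timedelta(hours=24),
) -> bool:
    """Return True if production would start inside the lockout window.

    ASSUMPTION: the window opens when maintenance ends and closes
    `lockout` later. Starts before maintenance ends are outside the
    scope of this rule and return False here.
    """
    return maintenance_end <= production_start < maintenance_end + lockout
```

A check like this could also run as a deterministic safeguard next to the prompt rule, so the decision does not rest on the model's date arithmetic alone.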

What You'll Learn

By following this tutorial, you'll understand the fundamental concepts of RAG and why it's essential for industrial AI applications that need to reason over both structured and unstructured data. You'll learn the critical difference between naive RAG and agentic RAG, and why giving agents the choice of when to retrieve context leads to more efficient, more intelligent systems.

You'll gain hands-on experience with the core technologies of modern RAG pipelines—embedding models for converting text to vectors, vector databases for semantic search, and document chunking strategies for optimal retrieval. Importantly, you'll see how to run all of this locally using Ollama and Chroma, maintaining the edge deployment and data privacy benefits that industrial applications require.

Perhaps most valuable is understanding how to design RAG tools that provide actionable intelligence rather than just raw document snippets. The tutorial demonstrates the thought process of identifying what decision-critical information your agent needs and building specialized retrieval functions that extract exactly that information. This is the difference between an agent that can find documents and an agent that can make decisions based on documents.

You'll also see the reality of building these systems—including the challenges of ensuring agents handle missing documents appropriately and the importance of robust prompt engineering to guide agent behavior. The tutorial shows both the successes and the edge cases, giving you realistic expectations for deploying these systems in production.

Watch the Full Tutorial

The complete video tutorial provides a detailed, step-by-step walkthrough of building an agentic RAG pipeline for industrial data. You'll see the actual code, the document loading and embedding process, the semantic search in action, and the complete agent making production decisions that consider both real-time data and maintenance documentation.

The Scalability Challenge

The agentic RAG pipeline we build is powerful and production-ready for a single agent. But there's a significant challenge lurking beneath the surface. We've created tools for our agent by wrapping Python functions and mapping them as custom LangChain tools. That works fine for one agent built with one framework, but what happens when you need to build another AI agent using a different framework?

Imagine your organization decides to build another agent using the Google Agent Development Kit, or one using the CrewAI framework, and these agents also need access to the same OPC UA server, TimescaleDB database, and maintenance documents. With the current approach, you'd have to rebuild and remap the tools for each framework, writing the same integrations over and over in slightly different ways to meet each framework's specific requirements.

This clearly doesn't scale. As AI agents become more prevalent in industrial operations, and as different teams adopt different frameworks and tools, we need a standardized way for agents to access data and tools. We need to build the integration once and have it work across any agent from any vendor without rewriting code.

Part 4 of this series addresses exactly this challenge by introducing the Model Context Protocol (MCP). With MCP, you can standardize how industrial AI agents access data and tools, creating reusable integrations that work seamlessly across frameworks and platforms. It's the missing piece that makes industrial AI truly scalable across the enterprise.