October 3, 2025

In Parts 1 through 4, we built a sophisticated industrial AI agent that can access real-time equipment data from an OPC UA server, query databases for product recipes, search through maintenance documents using agentic RAG, and expose all these capabilities through a standardized MCP server. But we've been building everything as a single, monolithic agent—one system doing everything. And while that works, it's not how complex industrial operations actually function.

Real industrial facilities don't have one person who knows everything about equipment, materials, recipes, maintenance schedules, and production planning. They have specialists. You have operators who understand equipment intimately, planners who know material requirements and recipes, maintenance technicians who track equipment health, and supervisors who coordinate across all these domains. Each brings deep expertise in their area, and they communicate through standardized protocols and procedures to make decisions collaboratively.

That's exactly what we're building in this tutorial—and it's the culmination of everything we've learned in this five-part series.

What We're Building

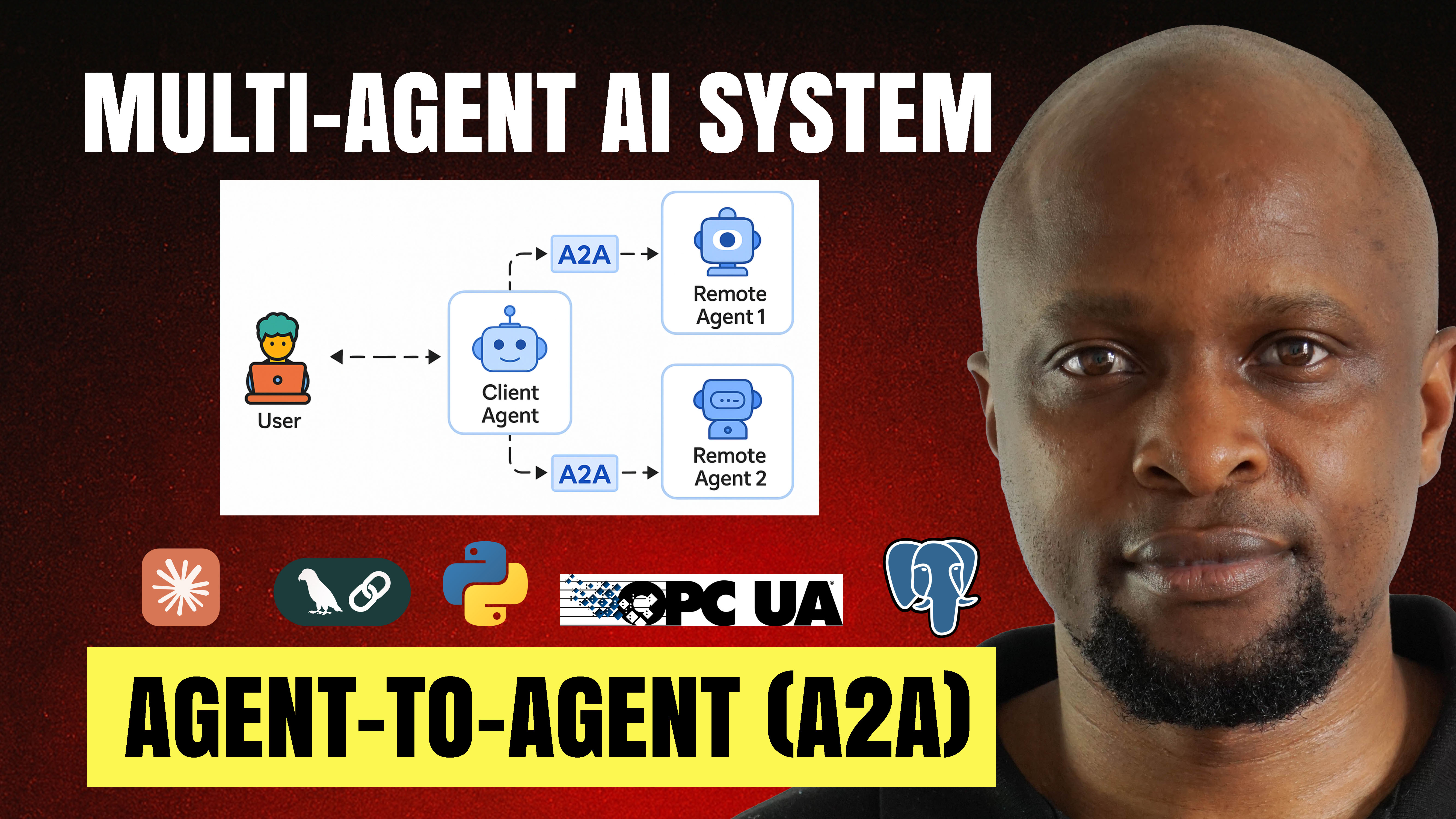

This is Part 5, the final installment of our series on building agentic AI for industrial systems. In this tutorial, we're decomposing our monolithic AI agent into a multi-agent system where specialized agents communicate through the Agent-to-Agent (A2A) protocol. Instead of one agent trying to do everything, we create three specialized agents that collaborate to make production decisions.

The equipment monitoring agent specializes in real-time data, connecting to our OPC UA server to provide authoritative information about tank levels, machine states, and operational statuses. The material calculating agent specializes in product knowledge, connecting to our TimescaleDB database to understand product recipes, material requirements, and batch calculations. The orchestrator agent coordinates the other two, knowing which specialist to consult for different types of information and how to combine their insights into final decisions.

These agents don't need to know each other's internal implementation details. They don't care whether another agent uses LangChain or a different framework, whether it runs locally or in the cloud, or what language model powers its reasoning. They communicate through the A2A protocol using standardized messages, creating a system that's far more flexible and maintainable than tightly coupled integrations.

Why Multi-Agent Systems Matter

The monolithic approach we used in earlier parts works fine for simple scenarios, but it has fundamental limitations that become apparent as systems grow in complexity. When one agent handles everything, it needs access to all data sources, must understand all business logic, and becomes a single point of failure. Adding new capabilities means modifying the core agent, which increases complexity and risk. Different teams can't work on different aspects independently because everything is interconnected in one codebase.

Multi-agent systems solve these problems through specialization and standardization. Each agent becomes an expert in its domain, which can improve decision quality because a specialized agent's prompts and tools can be tuned for one narrow task. You can scale different agents independently based on load: if equipment monitoring requires more resources than material calculations, you can deploy more instances of just that agent. Teams can develop and deploy agents independently as long as they adhere to the communication protocol. And critically, you can replace or upgrade individual agents without touching the others.

This architectural approach also mirrors how organizations actually work, making the system more intuitive to understand and maintain. When operators see that there's an equipment monitoring agent and a material calculating agent, they immediately understand the division of responsibilities. This isn't just good engineering—it's building AI systems that align with how people already think about industrial operations.

Understanding the Agent-to-Agent Protocol

The Agent-to-Agent protocol is a standardized framework that lets AI agents from different vendors, using different models and built with different frameworks, communicate and collaborate. Think of it as a universal language for AI agents. Without a shared protocol, agents built with LangChain, AutoGen, and CrewAI can't readily interoperate, because each framework uses its own communication format. A2A provides the common language they all understand.

The protocol follows a request-response model similar to how web APIs work. A client agent sends a request and a server agent responds, but here's the interesting part—any agent can be both a client and a server. Your equipment monitoring agent serves data when asked, but it might also need to request information from other agents for its own purposes. This flexibility enables rich collaboration patterns beyond simple hierarchical structures.

A2A defines five core capabilities that make this interoperability possible. First, standardized message formats: agents exchange structured, typed JSON-RPC 2.0 messages over HTTP, and newer versions of the specification also describe a gRPC transport, so any system can parse what it receives. Second, dynamic discovery through agent cards allows each agent to publish a description of its capabilities, essentially a business card that says "I'm the equipment monitoring agent, I can provide tank levels and machine states, and here's how to reach me." Other agents can discover these cards and automatically know what capabilities are available without manual configuration.
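To make the business-card idea concrete, here is a minimal sketch of an agent card as a plain Python data structure serialized to JSON. The field names (`name`, `description`, `url`, `skills`) are a simplified illustration rather than the full A2A schema, and the address and skill ids are made up for this example.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AgentSkill:
    id: str
    description: str


@dataclass
class AgentCard:
    name: str
    description: str
    url: str  # where the agent's A2A endpoint is served (hypothetical address below)
    skills: list[AgentSkill] = field(default_factory=list)


# Hypothetical card for the equipment monitoring agent.
equipment_card = AgentCard(
    name="equipment-monitoring-agent",
    description="Provides tank levels and machine states from the OPC UA server.",
    url="http://localhost:8001",
    skills=[
        AgentSkill(id="tank_levels", description="Current tank levels"),
        AgentSkill(id="machine_states", description="Current machine states"),
    ],
)

# asdict() converts nested dataclasses too, so the card serializes in one call.
card_json = json.dumps(asdict(equipment_card), indent=2)
print(card_json)
```

Another agent only needs this JSON document to learn what the equipment agent offers and where to send requests.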

Third, built-in task management provides orchestration capabilities so agents can communicate work status and provide progress updates. Fourth, multimodal data exchange means agents aren't limited to text: they can exchange sensor data, graphs, maintenance reports, even video feeds from inspection cameras. Finally, the protocol covers security and asynchronous communication, with encryption for sensitive industrial data and real-time streaming updates over Server-Sent Events, which is essential for industrial applications with continuous data flows.
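Task management is easier to picture as a small state machine. The sketch below is an illustration only: the state names and allowed transitions are assumptions chosen for this example and should be checked against the A2A specification, which defines more states than the four shown here.

```python
from dataclasses import dataclass, field
from enum import Enum


class TaskState(str, Enum):
    # Assumed subset of states, for illustration only.
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"


# Which states may follow which (an assumption of this sketch).
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED},
    TaskState.WORKING: {TaskState.COMPLETED, TaskState.FAILED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
}


@dataclass
class Task:
    id: str
    state: TaskState = TaskState.SUBMITTED
    history: list[str] = field(default_factory=list)

    def advance(self, new_state: TaskState, note: str = "") -> None:
        """Move to new_state and record a progress note, rejecting illegal jumps."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"cannot go from {self.state.value} to {new_state.value}")
        self.state = new_state
        self.history.append(f"{new_state.value}: {note}")


task = Task(id="task-1")
task.advance(TaskState.WORKING, "reading tank levels")
task.advance(TaskState.COMPLETED, "levels returned")
print(task.state.value, task.history)
```

The `history` list stands in for the progress updates a server agent would report back to its client.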

The Multi-Agent Architecture

The architecture we build demonstrates how these concepts work in practice. When a user asks "Can we produce four batches of Product A?" the request goes to our orchestrator agent, which exposes an A2A server endpoint. The orchestrator maintains an agent registry listing all agents it can reach via A2A. Using this registry, it discovers each agent's capabilities by reading their agent cards from well-known endpoints. It knows the equipment monitoring agent can provide material availability and machine status information, and it knows the material calculating agent can provide production requirements.
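The discovery step can be sketched as a loop over the registry that fetches each agent's card and records its skills. This is a simplified illustration, not the tutorial's code: the well-known path, the registry URLs, and the card contents are assumptions, and the fetch function is injected so the example runs without a network.

```python
from typing import Callable

# Assumed well-known path; check the A2A spec version you target.
WELL_KNOWN_PATH = "/.well-known/agent.json"


def card_url(base_url: str) -> str:
    """Build the agent card URL from an agent's base URL."""
    return base_url.rstrip("/") + WELL_KNOWN_PATH


def discover(registry: list[str], fetch: Callable[[str], dict]) -> dict[str, list[str]]:
    """Map each registered agent's name to the skill ids listed on its card."""
    capabilities: dict[str, list[str]] = {}
    for base_url in registry:
        card = fetch(card_url(base_url))
        capabilities[card["name"]] = [skill["id"] for skill in card.get("skills", [])]
    return capabilities


# Stand-in for HTTP GET: hypothetical cards keyed by URL.
FAKE_CARDS = {
    "http://localhost:8001/.well-known/agent.json": {
        "name": "equipment-monitoring-agent",
        "skills": [{"id": "tank_levels"}, {"id": "machine_states"}],
    },
    "http://localhost:8002/.well-known/agent.json": {
        "name": "material-calculating-agent",
        "skills": [{"id": "batch_requirements"}],
    },
}

registry = ["http://localhost:8001", "http://localhost:8002/"]
capabilities = discover(registry, FAKE_CARDS.__getitem__)
print(capabilities)
```

In a real deployment, `fetch` would be an HTTP client call, and adding a new specialist would only require a new registry entry.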

The orchestrator delegates tasks based on what's needed. It might ask the material calculating agent "What materials are needed for four batches of Product A?" while simultaneously asking the equipment monitoring agent "What are the current tank levels and machine states?" Each specialist agent exposes an A2A server endpoint, so to communicate with them, the orchestrator runs its own A2A client connections. The equipment monitoring agent connects to the OPC UA server and retrieves real-time data, while the material calculating agent queries the database and calculates requirements. They respond to the orchestrator with structured data through A2A.
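Asking both specialists at the same time maps naturally onto `asyncio.gather`. In this minimal sketch, the two coroutines are stand-ins for A2A client calls, and the material name, quantities, and machine name are invented for illustration.

```python
import asyncio


async def ask_material_agent(product: str, batches: int) -> dict:
    """Stand-in for an A2A call to the material calculating agent."""
    await asyncio.sleep(0.01)  # simulate network latency
    return {"required": {"material_x": 100.0 * batches}}  # made-up recipe


async def ask_equipment_agent() -> dict:
    """Stand-in for an A2A call to the equipment monitoring agent."""
    await asyncio.sleep(0.01)
    return {
        "tank_levels": {"material_x": 500.0},      # made-up reading
        "machine_states": {"mixer_1": "running"},  # made-up state
    }


async def delegate(product: str, batches: int) -> tuple[dict, dict]:
    # Both requests are in flight at once; gather returns results in call order.
    requirements, equipment = await asyncio.gather(
        ask_material_agent(product, batches),
        ask_equipment_agent(),
    )
    return requirements, equipment


requirements, equipment = asyncio.run(delegate("Product A", 4))
print(requirements, equipment)
```

Because the two questions are independent, the total wait is roughly that of the slower agent rather than the sum of both.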

The orchestrator combines these insights, applies business logic like checking if materials are sufficient and machines are operational, and provides the final answer to the user. Throughout this entire workflow, no agent needs to know the implementation details of any other agent. They're communicating through a standardized protocol that could work just as well if we replaced the equipment monitoring agent with one built on a completely different framework.
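The business-logic step can be as simple as comparing two dictionaries. The function below is a hedged sketch of such a check: the data shapes, the material and machine names, and the rule that only a `"running"` machine counts as operational are all assumptions of this example.

```python
# Assumption: only these machine states count as operational.
READY_STATES = {"running"}


def check_feasibility(
    required: dict[str, float],
    tank_levels: dict[str, float],
    machine_states: dict[str, str],
) -> dict:
    """Combine material requirements with equipment data into one verdict."""
    # Amount missing per material, only for materials that fall short.
    shortages = {
        material: need - tank_levels.get(material, 0.0)
        for material, need in required.items()
        if tank_levels.get(material, 0.0) < need
    }
    not_ready = [name for name, state in machine_states.items() if state not in READY_STATES]
    return {
        "feasible": not shortages and not not_ready,
        "shortages": shortages,
        "machines_not_ready": not_ready,
    }


verdict = check_feasibility(
    required={"material_x": 400.0},
    tank_levels={"material_x": 500.0},
    machine_states={"mixer_1": "running"},
)
print(verdict)
```

Returning the shortages and blocked machines alongside the verdict gives the orchestrator what it needs to explain its answer to the user.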

What the Tutorial Covers

The full video tutorial walks through building this complete multi-agent system from scratch. We start by understanding the project structure, which is organized into three main directories—agents containing our AI agents, app for the user-facing application layer, and shared for common resources and the A2A protocol implementation. This organization makes it clear how different components relate and enables teams to work on different agents independently.

The tutorial dives deep into the A2A protocol implementation, showing you the data models that define how agents communicate according to the A2A specification. You'll see how agent cards work: they are the metadata structures that describe an agent's capabilities, skills, input and output modes, and how to reach it. We implement the JSON-RPC 2.0 messaging layer that A2A uses for communication, including request and response structures, error handling, and task management.
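The request, response, and error structures follow the JSON-RPC 2.0 envelope, which a few helper functions can illustrate. This is a sketch rather than the tutorial's implementation: the method name `message/send` and the parameter shape are assumptions, while the envelope fields and the `-32601` "Method not found" code come from the JSON-RPC 2.0 specification.

```python
import uuid
from typing import Any, Callable

METHOD_NOT_FOUND = -32601  # standard JSON-RPC 2.0 error code


def make_request(method: str, params: dict) -> dict:
    return {"jsonrpc": "2.0", "id": str(uuid.uuid4()), "method": method, "params": params}


def make_result(request_id: str, result: Any) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def make_error(request_id: str, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict, handlers: dict[str, Callable[[dict], Any]]) -> dict:
    """Dispatch a request to its handler, or answer with a JSON-RPC error."""
    handler = handlers.get(request["method"])
    if handler is None:
        return make_error(request["id"], METHOD_NOT_FOUND, "Method not found")
    return make_result(request["id"], handler(request["params"]))


# Hypothetical method name and payload, for illustration only.
request = make_request("message/send", {"text": "What are the current tank levels?"})
response = handle(request, {"message/send": lambda params: {"echo": params["text"]}})
print(response)
```

The response carries the same `id` as the request, which is how a client matches answers to questions when several calls are in flight.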

Then we build each of the three specialized agents. For the equipment monitoring agent, we show how to wrap existing OPC UA data access functions as LangChain tools and implement an A2A server that exposes these capabilities. The material calculating agent follows a similar pattern but connects to the database instead. The orchestrator agent is more sophisticated—it discovers other agents through the registry, dynamically creates tools based on discovered capabilities, and implements delegation logic that routes requests to appropriate specialists.
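Creating tools dynamically from discovered capabilities can be sketched with a function factory. The tutorial uses LangChain tools; to stay dependency-free, this example uses plain Python callables, and the `send` function, agent name, and skill id are hypothetical stand-ins.

```python
from typing import Callable

# send(agent_name, skill_id, question) -> answer; stands in for an A2A client call.
SendFn = Callable[[str, str, str], str]


def make_tool(agent_name: str, skill_id: str, send: SendFn) -> Callable[[str], str]:
    """Build one callable that routes a question to a specific agent skill."""

    def tool(question: str) -> str:
        return send(agent_name, skill_id, question)

    # A readable name and docstring help the orchestrator's model pick a tool.
    tool.__name__ = f"{agent_name}__{skill_id}".replace("-", "_")
    tool.__doc__ = f"Ask {agent_name} to use its '{skill_id}' skill."
    return tool


def build_tools(capabilities: dict[str, list[str]], send: SendFn) -> dict[str, Callable[[str], str]]:
    """Create one tool per (agent, skill) pair found during discovery."""
    tools = {}
    for agent_name, skill_ids in capabilities.items():
        for skill_id in skill_ids:
            tool = make_tool(agent_name, skill_id, send)
            tools[tool.__name__] = tool
    return tools


def fake_send(agent_name: str, skill_id: str, question: str) -> str:
    return f"{agent_name}/{skill_id}: {question}"


tools = build_tools({"equipment-monitoring-agent": ["tank_levels"]}, fake_send)
print(tools["equipment_monitoring_agent__tank_levels"]("What is the level of tank 1?"))
```

Because the tools are generated from agent cards, a newly registered specialist becomes available to the orchestrator without any change to its code.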

The demo at the end brings everything together in a compelling way. You'll see the user application connecting directly to individual agents to query them, then switching to communicate with the orchestrator for complex queries that require multiple specialists. You'll watch the orchestrator receive a production feasibility question, delegate to both the material calculating and equipment monitoring agents simultaneously, receive their responses, synthesize the information, and deliver a comprehensive answer with full reasoning.

What You'll Learn

By following this tutorial, you'll understand the fundamental principles of multi-agent systems and why specialization improves both system design and agent performance. You'll see how the Agent-to-Agent protocol enables standardized communication across different frameworks and implementations, making your AI ecosystem genuinely interoperable rather than locked into specific vendors or technologies.

You'll gain hands-on experience implementing A2A from the ground up, including agent cards for capability discovery, JSON-RPC messaging for standardized communication, task management for coordinating work across agents, and registry systems for dynamic agent discovery. Having implemented each piece yourself, you'll know not just how to use A2A but how it works under the hood.

Perhaps most importantly, you'll learn architectural patterns for decomposing monolithic systems into specialized components that collaborate effectively. This is a crucial skill that extends beyond AI—it's fundamental to building scalable, maintainable systems of any kind. The tutorial demonstrates how to think about boundaries between agents, what responsibilities each should own, and how to coordinate across these boundaries through well-defined protocols.

You'll also see the practical reality of building these systems, including handling timeouts, managing asynchronous communication, debugging multi-agent interactions, and ensuring agents fail gracefully when other agents are unavailable. These are the real-world concerns that separate proof-of-concept demos from production-ready systems.
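One common way to handle timeouts and unavailable agents is to wrap every remote call so that a failure becomes a structured result instead of an exception. The sketch below assumes the calls are coroutines and uses `asyncio.wait_for`; the agent names, the timeout value, and the result shape are illustrative choices, not the tutorial's implementation.

```python
import asyncio
from typing import Any, Awaitable, Callable


async def call_with_timeout(
    call: Callable[[], Awaitable[Any]], timeout_s: float, agent_name: str
) -> dict:
    """Run one agent call, turning timeouts and connection failures into data."""
    try:
        data = await asyncio.wait_for(call(), timeout=timeout_s)
        return {"ok": True, "data": data}
    except asyncio.TimeoutError:
        return {"ok": False, "error": f"{agent_name} timed out after {timeout_s}s"}
    except ConnectionError as exc:
        return {"ok": False, "error": f"{agent_name} unreachable: {exc}"}


async def slow_agent() -> dict:
    await asyncio.sleep(1)  # simulates an agent that answers too late
    return {"tank_levels": {}}


async def offline_agent() -> dict:
    raise ConnectionError("connection refused")  # simulates an agent that is down


async def main() -> list[dict]:
    # Both calls run concurrently; neither failure crashes the orchestrator.
    return await asyncio.gather(
        call_with_timeout(slow_agent, 0.05, "equipment-monitoring-agent"),
        call_with_timeout(offline_agent, 0.05, "material-calculating-agent"),
    )


results = asyncio.run(main())
print(results)
```

With failures returned as data, the orchestrator can tell the user which specialist was unavailable instead of failing the whole request.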

Watch the Full Tutorial

The complete video tutorial provides a detailed, step-by-step walkthrough of building this multi-agent system on top of the A2A protocol. You'll see the actual code for all three agents, the protocol implementation, the discovery and registry systems, and live demos showing agents communicating and collaborating to make production decisions.